Productionizing dbt as a Cloud Run Job: Infrastructure Management with Terraform and CI/CD with GitHub Actions - Part 3

This is the last part of our three-part series on running a dbt job in GCP. In Part 1, we went through the setup needed to run a dbt job on our local system. Next, we saw how to create the required infrastructure using Terraform and tested it locally as well.

In this article, we will cover the following GitHub Actions workflows.

- Build a Docker image for the dbt job and push it to the GCP Artifact Registry

- Create the infrastructure from the Terraform code we configured

Initial Setup

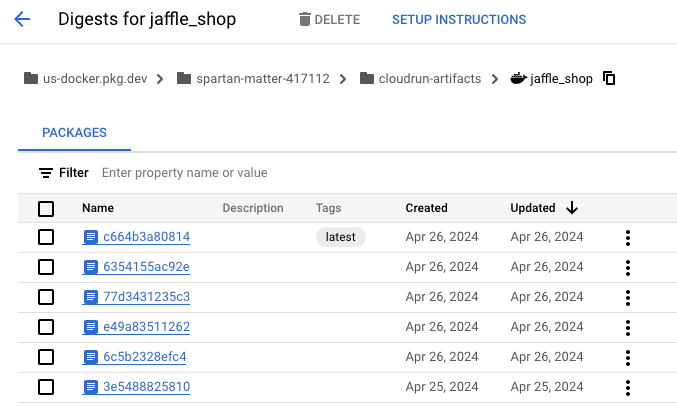

We need a repository to store the Docker images. Since a registry can be shared across multiple projects, it is better either to create it manually or to have a centralized Terraform repository that manages resources reused across projects. In our case, let us create the repository manually, name it cloudrun-artifacts, and choose Docker as the format. This repository can store the Docker artifacts for all our Cloud Run jobs and services.

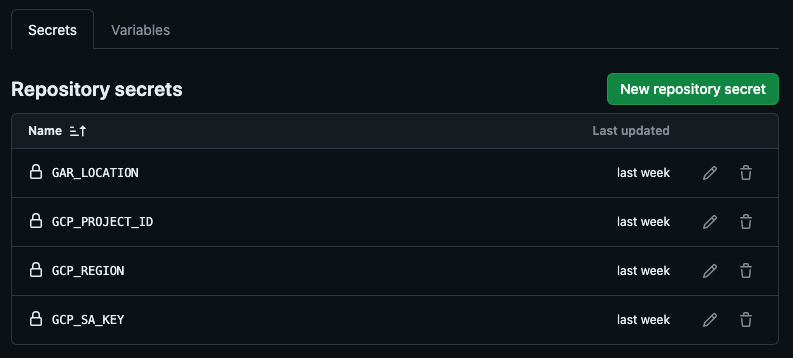

We also need a place to store our secrets. We will use GitHub secrets and store the values as shown in the screenshot. These values are read by GitHub Actions and also passed to Terraform while building the resources.

Maintain secrets in GitHub Actions
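Inside a workflow, a secret is read with the `${{ secrets.NAME }}` expression. Here is a minimal sketch of the authentication step, assuming the service account key is stored in a secret named GCP_CREDENTIALS. That name is hypothetical; use whatever names you chose when creating the secrets.

```yaml
# Sketch only: GCP_CREDENTIALS is an assumed secret name,
# not necessarily the one used in this project.
steps:
  - id: "auth"
    name: "Authenticate to Google Cloud"
    uses: "google-github-actions/auth@v1"
    with:
      # Service account key JSON, read from the repository's GitHub secrets
      credentials_json: "${{ secrets.GCP_CREDENTIALS }}"
```

GitHub redacts secret values when they appear in workflow logs, so the key itself should not show up in the run output.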

Create Docker Container

To deploy our dbt job as a Cloud Run Job, we first need to build a Docker image containing our code. Every time we change the code, we need to build a new image and also keep track of the changes.

In our project folder, create a new folder named .github and, under it, a folder named workflows. All CI/CD workflows reside in this folder, where GitHub Actions picks them up.

Let us create a file called docker-build.yml and paste the contents below. This creates a new workflow that performs the following:

- Check out the code

- Authenticate with GCP using the credentials passed in through the secrets we configured

- Authenticate Docker for the region

- Build the Docker image with the Dockerfile we created in Part 1

- Push the Docker image to the Artifact Registry location.

docker-build.yml

```yaml
name: Build and Push to Artifact Registry

on:
  workflow_dispatch:
  push:
    branches: ["main"]
  pull_request:
    branches: ["main"]

env:
  IMAGE_NAME: jaffle_shop
  TAG: latest

jobs:
  build-push-artifact:
    runs-on: ubuntu-latest
    steps:
      - name: "Checkout"
        uses: actions/checkout@v3

      - id: "auth"
        name: "Authenticate to Google Cloud"
        uses: "google-github-actions/auth@v1"
        with:
          credentials_json: "$"
          create_credentials_file: true
          export_environment_variables: true

      - name: "Set up Cloud SDK"
        uses: google-github-actions/setup-gcloud@v1

      - name: "Use gcloud CLI"
        run: gcloud info

      - name: "Docker auth"
        run: |
          gcloud auth configure-docker us-docker.pkg.dev

      - name: Build image
        run: |
          docker build . --file Dockerfile --tag $$:$ --build-arg PKG=$
        working-directory: .

      - name: Push image
        run: |
          docker push $$:$
```
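The bare `$` placeholders in the build and push steps appear to stand in for GitHub Actions expressions that make up the image reference. Here is a sketch of how that reference could be assembled, assuming a hypothetical secret named GCP_PROJECT_ID and the cloudrun-artifacts repository in the us multi-region. Both the secret name and the exact path layout are assumptions, and only the two steps that use the image reference are shown.

```yaml
env:
  IMAGE_NAME: jaffle_shop
  TAG: latest
  # Assumed layout: HOST/PROJECT/REPOSITORY.
  # GCP_PROJECT_ID is a hypothetical secret name.
  REGISTRY: us-docker.pkg.dev/${{ secrets.GCP_PROJECT_ID }}/cloudrun-artifacts

jobs:
  build-push-artifact:
    runs-on: ubuntu-latest
    steps:
      # Checkout and authentication steps omitted for brevity
      - name: Build image
        run: |
          docker build . --file Dockerfile --tag "$REGISTRY/$IMAGE_NAME:$TAG"

      - name: Push image
        run: |
          docker push "$REGISTRY/$IMAGE_NAME:$TAG"
```

Values defined under the workflow-level env block are exported to every run step as ordinary shell variables, which keeps the docker commands readable.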

Build Terraform Infrastructure

Once the Docker image is available in Artifact Registry, we can turn our attention to managing the infrastructure using Terraform.

We already created the Terraform code in the infra folder in our previous step. Now we have to create a new GitHub Actions workflow that runs Terraform against that code.

Create a new file deploy_infra.yml under the workflows directory and paste the contents below.

deploy_infra.yml

```yaml
name: Deploy to Google Cloud
run-name: "Terraform $"

on:
  workflow_dispatch:
    inputs:
      terraform_operation:
        description: "Terraform operation: plan, apply, destroy"
        required: true
        default: "plan"
        type: choice
        options:
          - plan
          - apply
          - destroy
          - output

env:
  IMAGE_NAME: jaffle_shop
  TAG: latest

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3.0.0

      - id: "auth"
        name: "Authenticate to Google Cloud"
        uses: "google-github-actions/auth@v1"
        with:
          credentials_json: "$"
          create_credentials_file: true
          export_environment_variables: true

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v1

      - name: Terraform init
        run: |
          cd infra
          terraform init

      - name: Terraform Plan
        env:
          TF_VAR_project_id: $
          TF_VAR_region: $
          TF_VAR_gar_image: $$:$
        run: |
          cd infra
          terraform plan
        if: "$"

      - name: Terraform apply
        env:
          TF_VAR_project_id: $
          TF_VAR_region: $
          TF_VAR_gar_image: $$:$
        run: |
          cd infra
          terraform apply --auto-approve
        if: "$"

      - name: Terraform destroy
        env:
          TF_VAR_project_id: $
          TF_VAR_region: $
          TF_VAR_gar_image: $$:$
        run: |
          cd infra
          terraform destroy --auto-approve
        if: "$"

      - name: Terraform output
        env:
          TF_VAR_project_id: $
          TF_VAR_region: $
          TF_VAR_gar_image: $$:$
        run: |
          cd infra
          terraform output
        if: "$"
```
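The terraform_operation input selected at dispatch time can drive both the run name and which Terraform step executes. Here is a sketch of how the run name and one step's condition might be written with `github.event.inputs`. Only the plan step is shown; the secret names GCP_PROJECT_ID and GCP_REGION and the image path are assumptions for illustration.

```yaml
run-name: "Terraform ${{ github.event.inputs.terraform_operation }}"

env:
  IMAGE_NAME: jaffle_shop
  TAG: latest

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      # Checkout, authentication, setup and init steps omitted for brevity
      - name: Terraform Plan
        # Runs only when "plan" was selected in the Run workflow dialog
        if: ${{ github.event.inputs.terraform_operation == 'plan' }}
        env:
          # GCP_PROJECT_ID and GCP_REGION are hypothetical secret names
          TF_VAR_project_id: ${{ secrets.GCP_PROJECT_ID }}
          TF_VAR_region: ${{ secrets.GCP_REGION }}
          # Assumed Artifact Registry path layout
          TF_VAR_gar_image: us-docker.pkg.dev/${{ secrets.GCP_PROJECT_ID }}/cloudrun-artifacts/${{ env.IMAGE_NAME }}:${{ env.TAG }}
        run: |
          cd infra
          terraform plan
```

The apply, destroy and output steps would follow the same pattern, each comparing the input against its own operation name.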

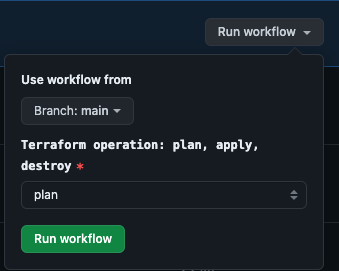

To keep things simple, we are not running this workflow automatically. We have configured it as a manually triggered workflow with the options below.

```yaml
on:
  workflow_dispatch:
    inputs:
      terraform_operation:
        description: "Terraform operation: plan, apply, destroy"
        required: true
        default: "plan"
        type: choice
        options:
          - plan
          - apply
          - destroy
          - output
```

Starting the workflow from GitHub gives us the option to choose which operation to perform. In a production scenario, we could configure this workflow to run terraform init and terraform plan every time a pull request targeting main is opened or updated. This would be a simple change in the workflow: remove workflow_dispatch and add the trigger below.

```yaml
pull_request:
  branches: ["main"]
```
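On a pull_request event there is no terraform_operation input, so a plan step that checks only that input would be skipped. One way to handle this, sketched here as an assumption rather than the project's actual configuration, is to keep the manual trigger alongside the new one and extend the plan step's condition. Authentication and the TF_VAR_ variables are omitted for brevity.

```yaml
on:
  workflow_dispatch:
    # inputs unchanged from the manual configuration
  pull_request:
    branches: ["main"]

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3.0.0

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v1

      - name: Terraform init
        run: |
          cd infra
          terraform init

      - name: Terraform Plan
        # Plan on every pull request to main, or when "plan" is chosen manually
        if: github.event_name == 'pull_request' || github.event.inputs.terraform_operation == 'plan'
        run: |
          cd infra
          terraform plan
```

With this condition, the apply and destroy steps still run only when chosen explicitly from a manual dispatch.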

Running the workflows

Now that we have both of our workflows created, let us try to trigger them to see if they are working as expected.

Since we have configured docker-build.yml to run on every push or pull request to main, and since we have already pushed to main while creating the code, we should see some Docker images in Artifact Registry.

And yes, we can see the images in Artifact Registry, and the most recent one is tagged as ‘latest’. We could also add the git commit ID as a tag in our workflow to keep track of changes.
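Tagging with the commit ID could be done through the GITHUB_SHA variable that GitHub sets on every runner. Here is a sketch of the build and push steps carrying both tags. The REGISTRY path and the GCP_PROJECT_ID secret name are assumptions, and the checkout and authentication steps are left out.

```yaml
env:
  IMAGE_NAME: jaffle_shop
  # Assumed registry path; GCP_PROJECT_ID is a hypothetical secret name
  REGISTRY: us-docker.pkg.dev/${{ secrets.GCP_PROJECT_ID }}/cloudrun-artifacts

jobs:
  build-push-artifact:
    runs-on: ubuntu-latest
    steps:
      - name: Build image with two tags
        run: |
          # One build, tagged both as latest and with the commit SHA
          docker build . --file Dockerfile \
            --tag "$REGISTRY/$IMAGE_NAME:latest" \
            --tag "$REGISTRY/$IMAGE_NAME:$GITHUB_SHA"

      - name: Push all tags
        run: |
          docker push --all-tags "$REGISTRY/$IMAGE_NAME"
```

For runs triggered by a pull request, GITHUB_SHA refers to the temporary merge commit rather than the head of the branch, so SHA tags are most useful on pushes to main.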

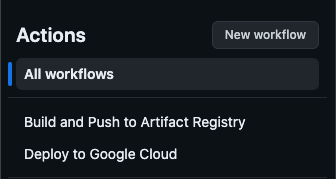

Now that we have a Docker image available in Artifact Registry, we can run our second workflow to create the Terraform resources.

We go to the GitHub website, choose our repository, and click “Actions”. Here we should be able to see our two workflows.

Choose the “Deploy to Google Cloud” workflow and click “Run workflow” on the right. We get the option to choose which Terraform operation to perform: plan, apply, destroy, or output.

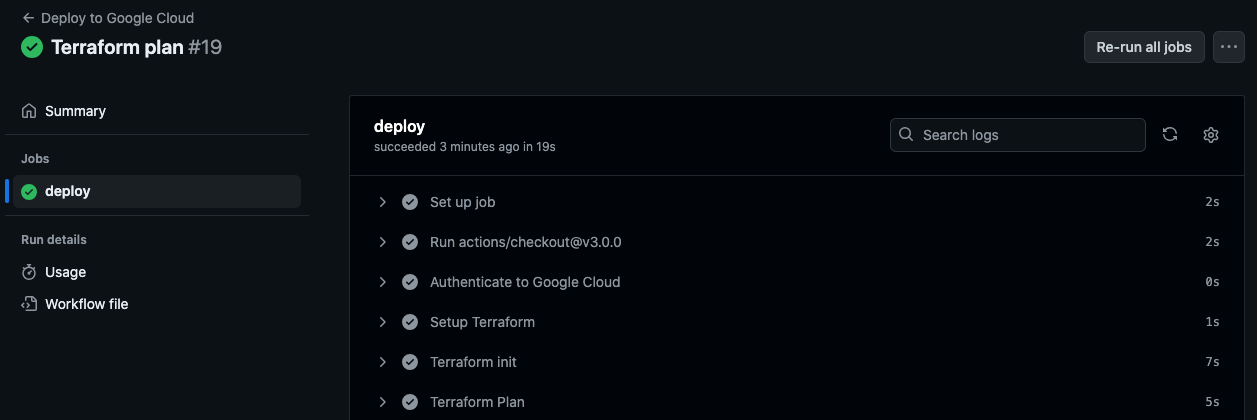

Let us choose “plan”, and run the workflow.

We can see that the workflow is successful.

We can check the Terraform Plan logs to see the resources that would be created. The logs are similar to what we saw when we ran the code locally.

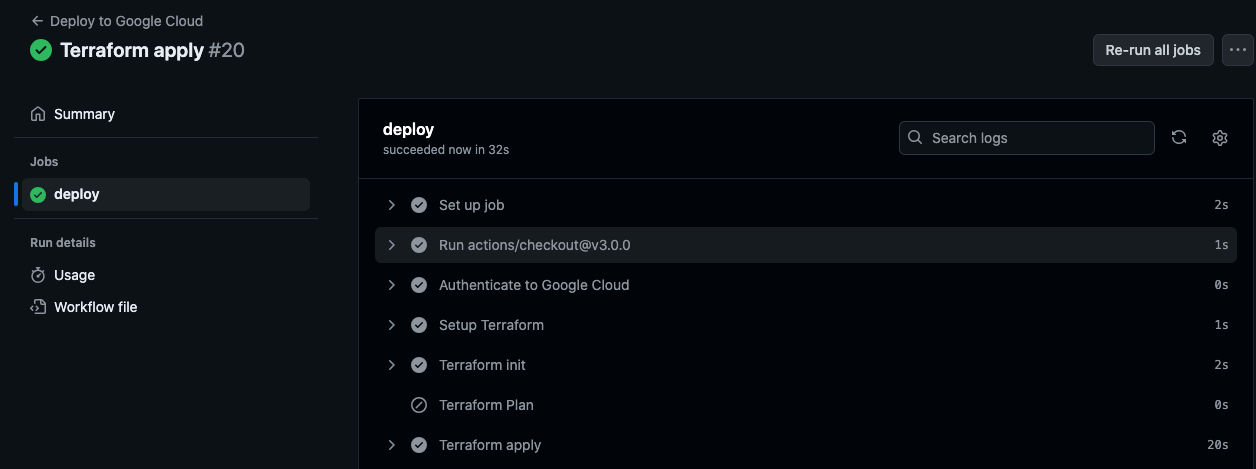

Now, let us run the workflow again and choose “apply” this time to create the resources.

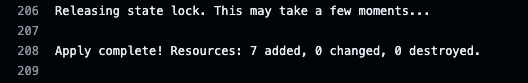

The workflow completed successfully.

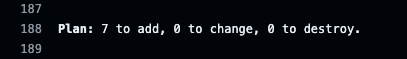

Let us check the logs of the “Terraform apply” step to confirm. We can see that the 7 resources have been created.

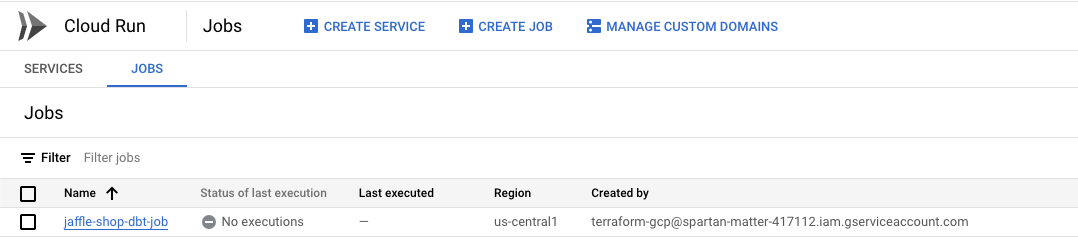

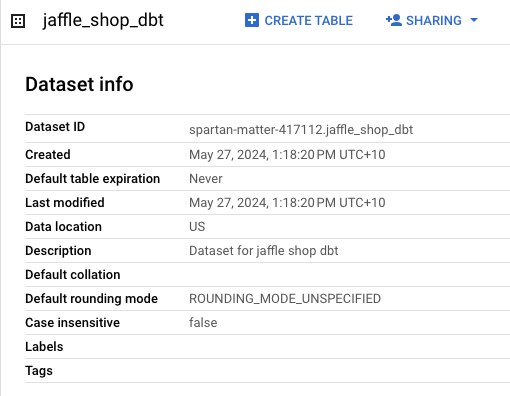

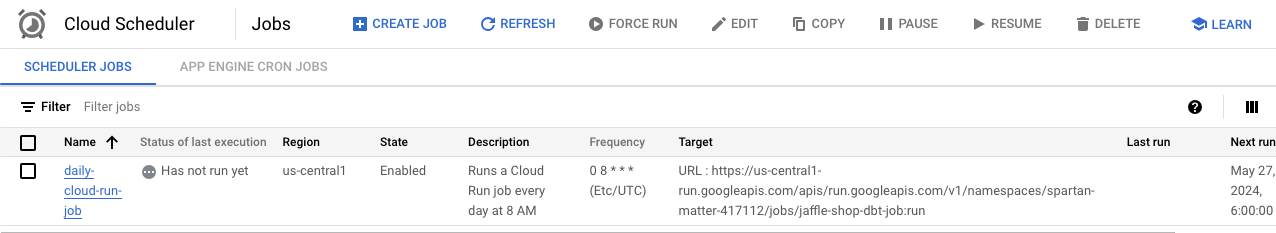

Let us confirm that the resources have been created in GCP by checking the console.

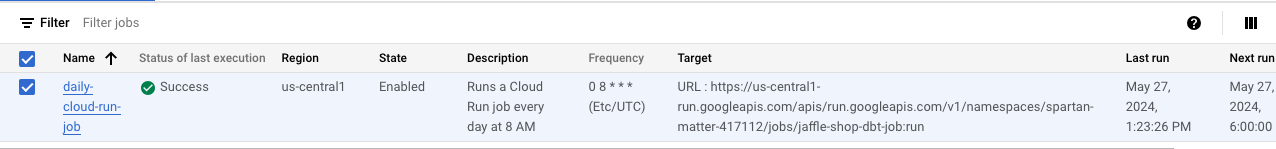

Let us try to run the job manually and see if it runs end-to-end. From the Scheduler, click on the job and click “Force Run”.

Now we can see that the value of the “Status of last execution” field has changed to “Success”.

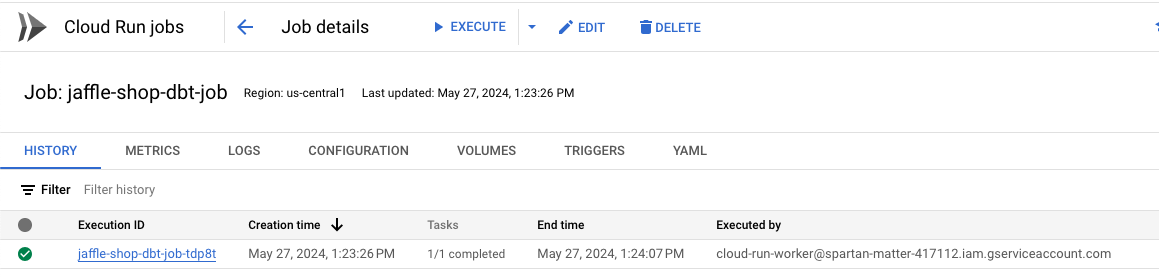

Now we can check the Cloud Run Job and click on the “History” tab.

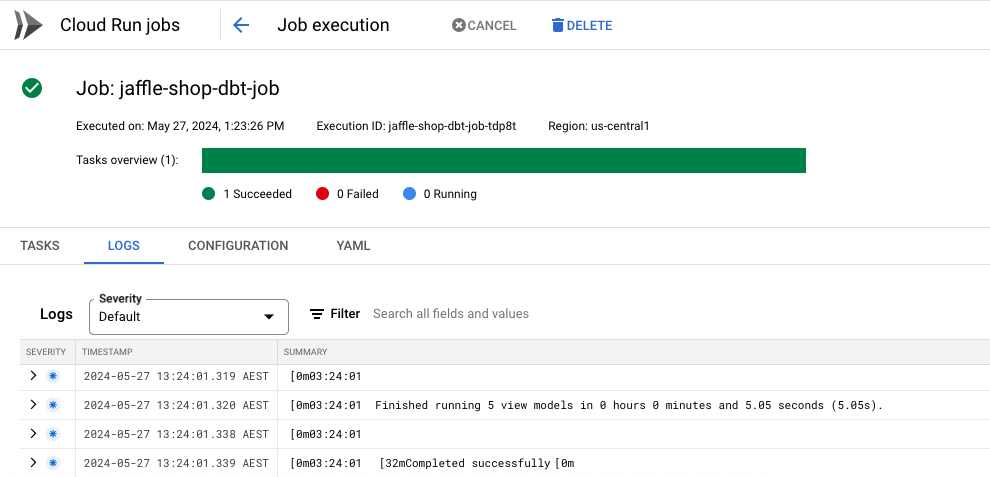

Clicking on the execution and choosing “Logs” shows us the dbt logs in our case. The logs show that 5 models have been created and that the run was successful.

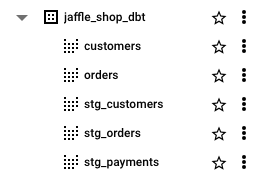

Opening BigQuery, let us see if the 5 views are created.

With this, we have come to the end of the series. It was a long journey, and thank you for joining me on this ride.

In the future, I will try to expand this sample project by adding more features and functionality where possible.

Please share any ideas for expanding it in the comments.