BigQuery Data Transfer Service: What, why and how?

In this article let us uncover the BigQuery Data Transfer service, what is it, when to use it, what its limitations are and a sample terraform code to deploy one onto GCP.

What is BigQuery Data Transfer Service

According to Google, BigQuery Data Transfer Service is used to automate data movements from sources like Cloud Storage, AWS S3, Redshift etc onto BigQuery.

The list of supported data sources might change over time. Check out the document for up-to-date information.

When to use it?

You might wonder if GCP has many such tools for bringing data from other sources onto BigQuery, then what reasoning that the Data Transfer service have?

From my understanding, the BigQuery Data Transfer service is best suited if you don’t want to do any data transformation, load the data as-is from the source to the BigQuery tables and are fine to run it on a scheduled basis. It is also a code-free option (Ahem! If you want to deploy it to production using any IaC tools like Terraform, then you need to have a code written for it).

Known Limitations

- You only have a handful of services to choose from. If the connector you are looking for is not on the list, then either you need to create a custom connector or choose other GCP services.

- As already mentioned, you cannot perform any data transformations to the source data (even column name change).

- You can only run it on a schedule or on-demand. You cannot configure it to run based on an event (like when a file is placed in the GCS bucket) or a trigger (a PubSub message). It can only be a time-based trigger.

- You can only use this service to bring data onto BigQuery. This doesn’t support moving data out of BigQuery.

- From the source files, you can only append or overwrite the BigQuery table. There is no option to upsert. If your source file might contain duplicate rows then we would end up with duplicate rows at the destination table.

Using BigQuery Data Transfer Service with GCP Console

Let us explore the BigQuery Data Transfer Service from the GCP Console. For us to start we need the below:

- A GCS bucket in which we would place the source files. The BQ Data Transfer service would be picking the files from this bucket.

- A target BigQuery table to which our BQ Data Transfer service would write to.

For the data, I downloaded the customers-1000.csv dataset and split the files into 10 CSV files each having 100 customers.

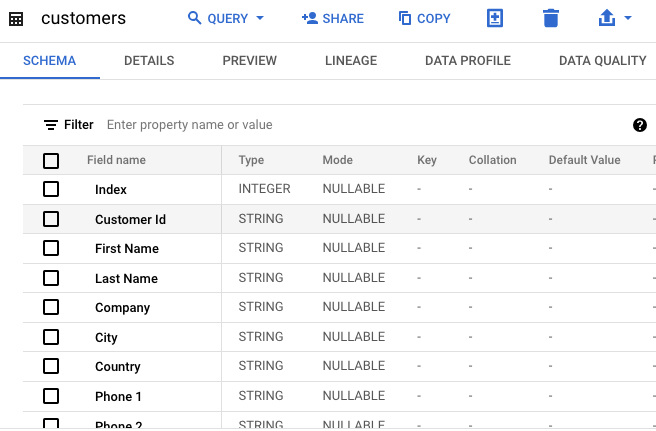

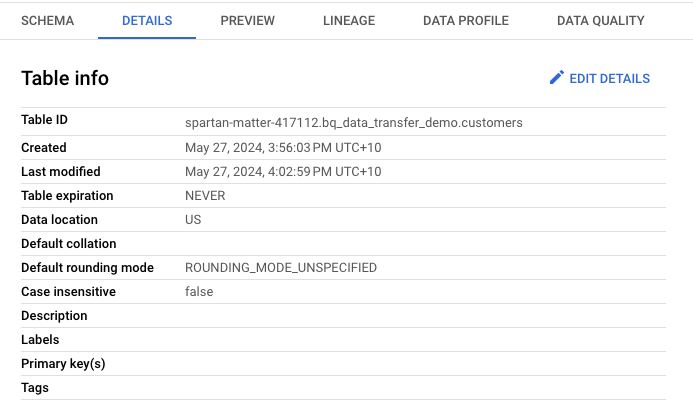

Let us create a “customers” table to hold the sample dataset.

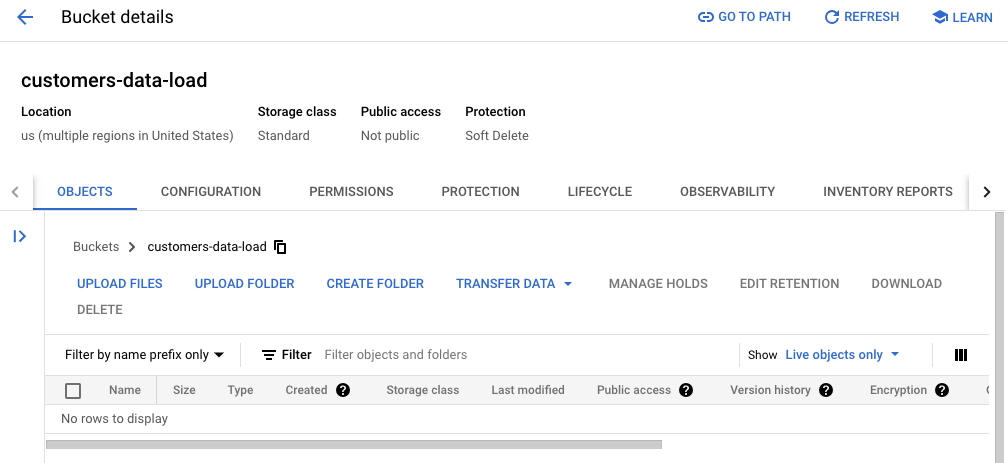

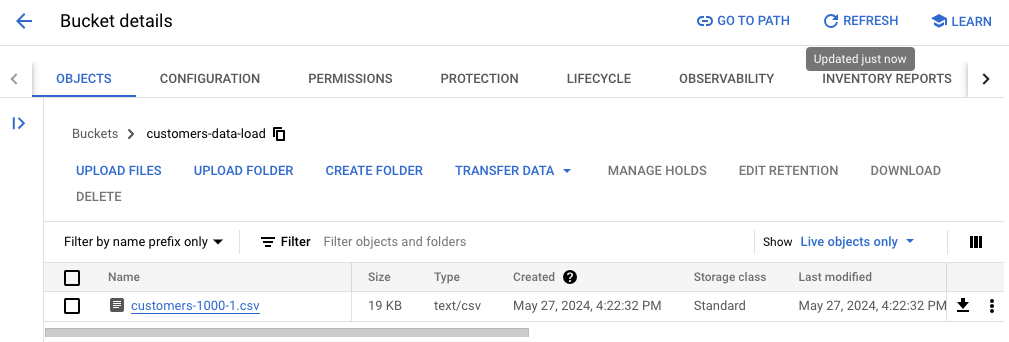

We will also create the GCS Bucket needed.

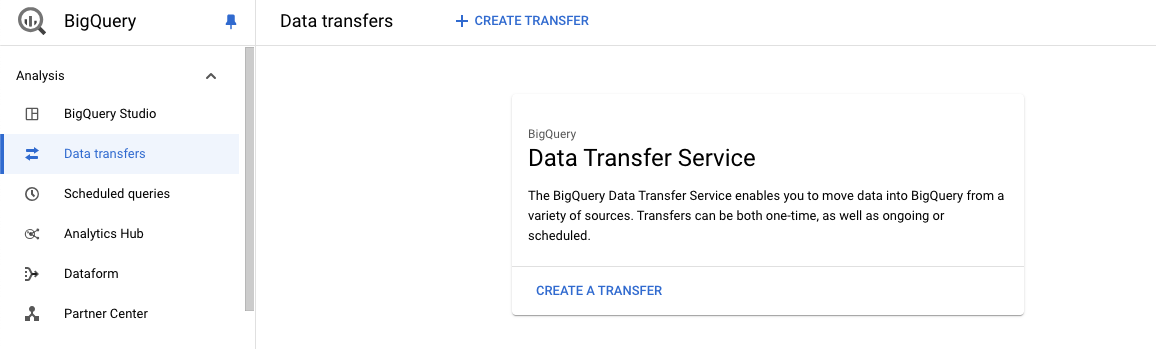

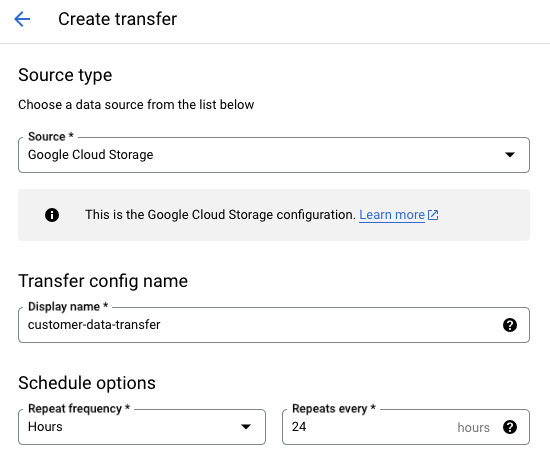

Now let us create a new Data Transfer Service. Go to the BigQuery console, choose “Data Transfers” and click on “Create a Transfer”.

Choose the source as “Google Cloud Storage” and give a name for our service. Also, we have configured it to run every 24 hours.

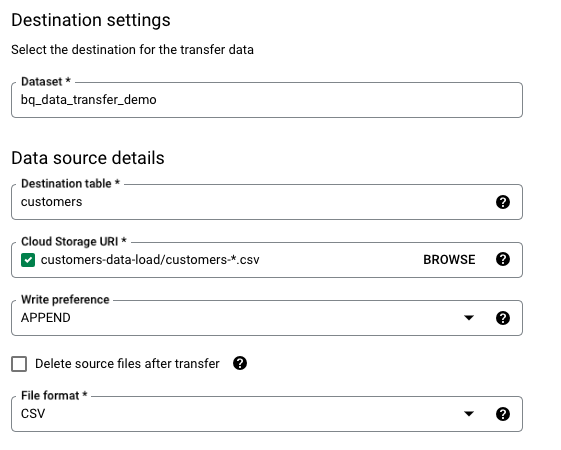

Select the dataset and table we have created earlier. Also, choose the Cloud Storage URI to pick the files with the pattern “customers-*.csv” and choose the mode “Append”.

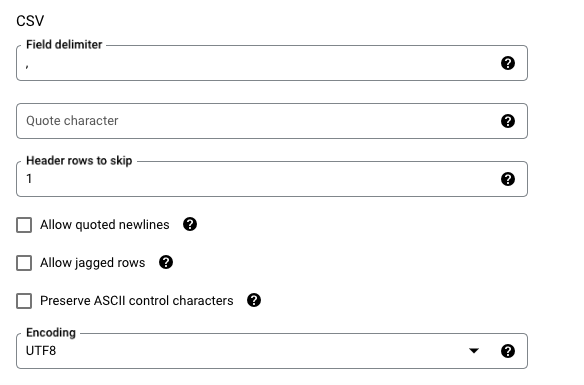

Our file has headers in the first row and we need to skip that.

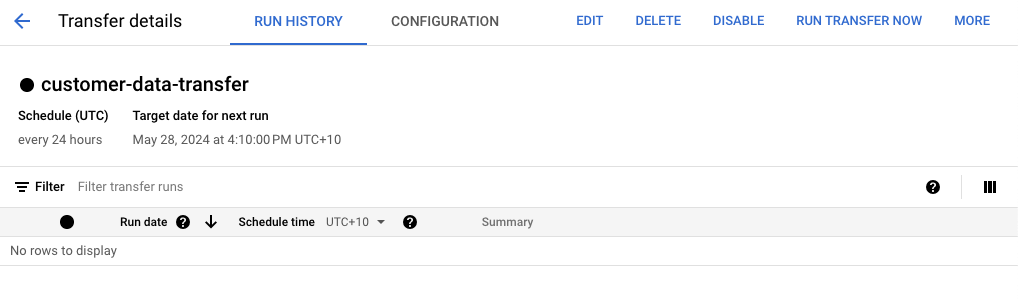

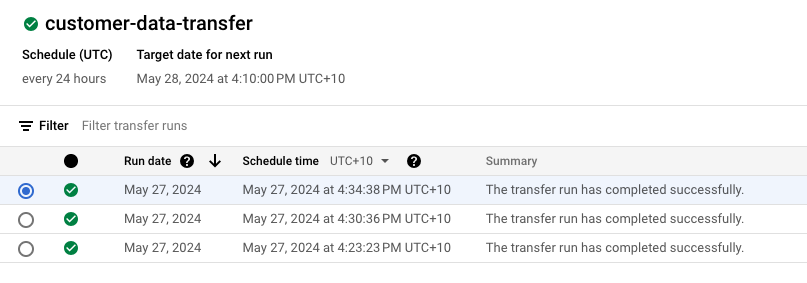

We have our Data Transfer Service created.

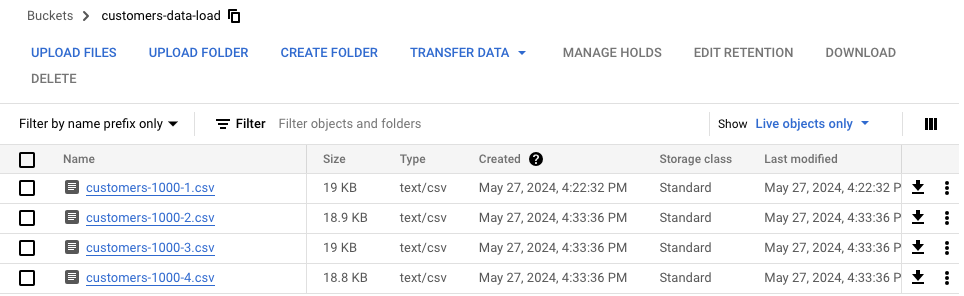

Upload a file manually to the GCS bucket.

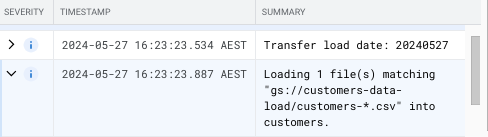

Now let us manually run the service and check the logs. We could see that it is picking one file from the GCS bucket that we uploaded.

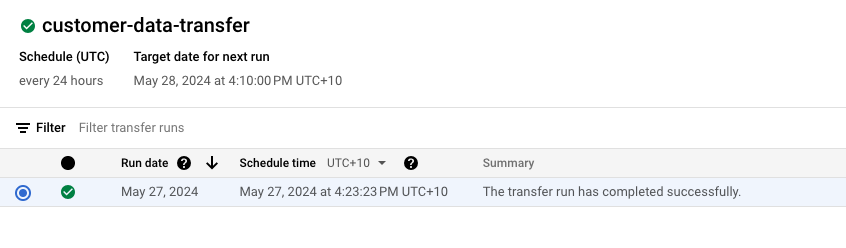

After a few seconds, the job is completed.

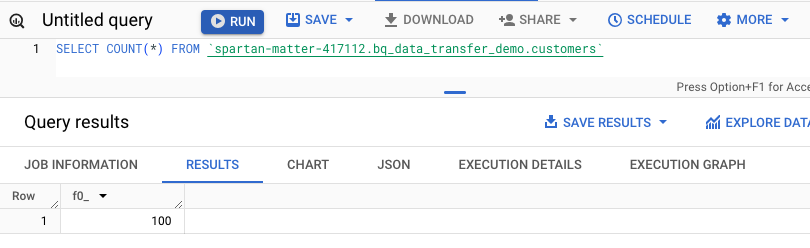

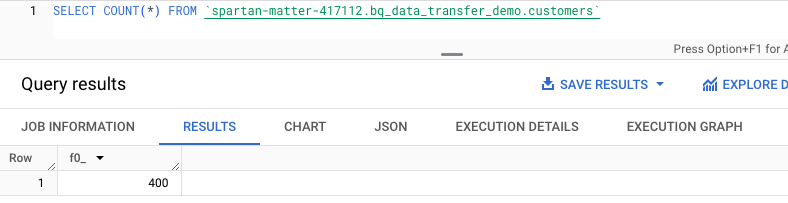

Let us check the BigQuery table to see if the 100 customers are loaded.

One thing that I noticed is that the service keeps track of the files it has processed already. So there is no need to delete any files that are processed.

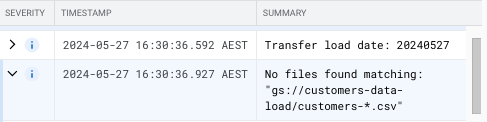

During our deployment, we wanted to make sure of this behavior since I couldn’t find it in the documentation. Let us rerun the service without placing any new files and check the logs.

The logs show that no files are matching since it has already processed the file “customers-1000-1.csv”.

Let us upload 3 new files to the GCS Bucket and rerun the service.

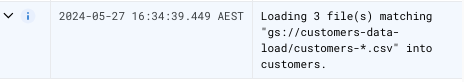

The logs from the service show that 3 files are getting picked up for processing.

After a few seconds, the job is completed

Now let us check the BigQuery table. Our first run had 100 rows. And now since we uploaded 3 new files with each having 100 customers, we need to have 400 customers in the table. The results match as expected.

Using BigQuery Data Transfer Service with Terraform

Let us now try to recreate the same BigQuery Data Transfer Service using Terraform instead of manually creating it through the console.

To make things easier, let us hardcode the values needed for the GCS Bucket, BigQuery dataset, and table. In a production scenario, we would be creating these as a part of the same project and passing them as variables instead of hardcoding them.

Create a file named “providers.tf” which would hold the GCP Provider information.

provider.tf

1

2

3

4

5

6

7

8

9

10

11

12

13

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "5.21.0"

}

}

}

provider "google" {

project = "spartan-matter-417112"

region = "us"

}

Create another file called “bqtransfer.tf” and paste the contents from below.

bqtransfer.tf

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

resource "google_bigquery_data_transfer_config" "customer_data_transfer" {

display_name = "customer-data-transfer-tf"

location = "us"

data_source_id = "google_cloud_storage"

schedule = "every 24 hours"

destination_dataset_id = "bq_data_transfer_demo"

params = {

destination_table_name_template = "customers"

data_path_template = "gs://customers-data-load/customers-*.csv"

write_disposition = "APPEND"

file_format = "CSV"

skip_leading_rows = 1

}

}

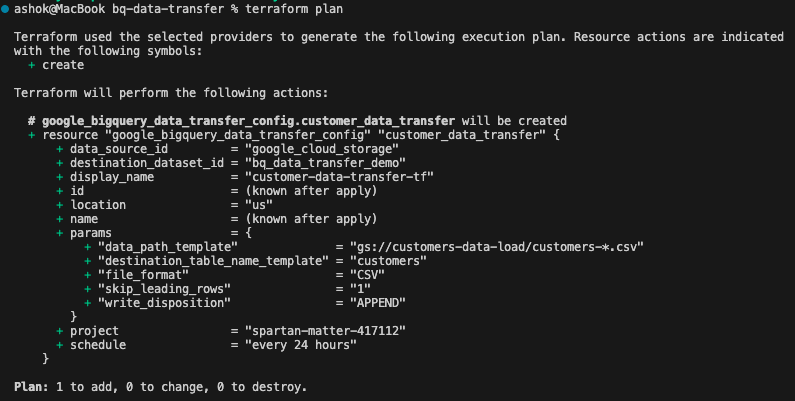

Let us run “terraform plan” command to see the plan. We could see in the plan that it would create the BigQuery Data Transfer service with the details below.

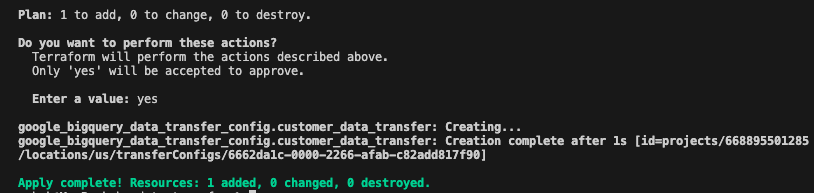

Now, we can run “terraform apply” to create this resource in GCP.

On confirming “yes”, we get the below confirmation that the resource has been created

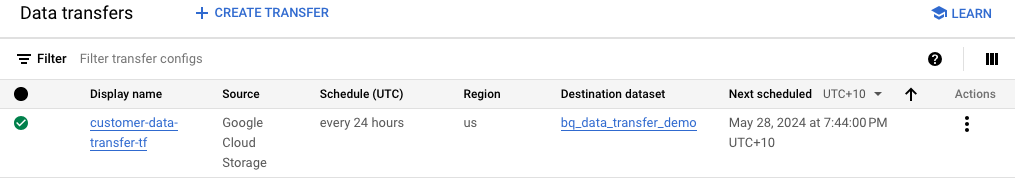

We can confirm the same by checking in the console.

Note: The documentation in terraform is not helpful and it doesn’t show all the options available. It only shows an example of scheduled queries and nothing more. I referred to the GCP documentation on Cloud Storage transfers which shows all the parameters that need to be passed and used while creating the terraform code.